Introduction – Setting the 2025 Crypto-AI Landscape

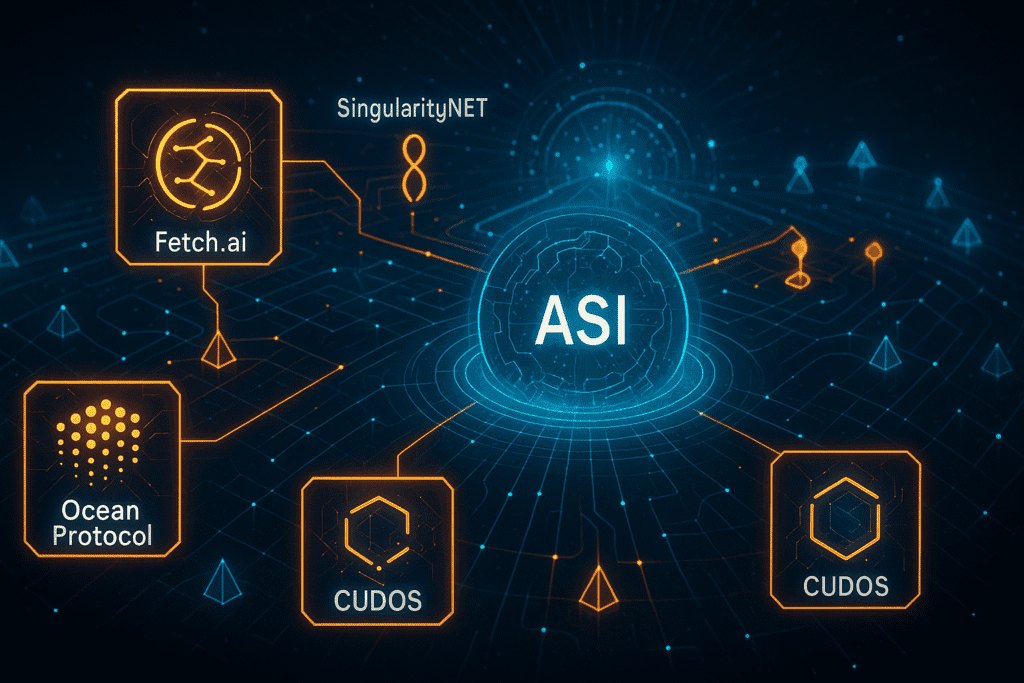

If you zoom out on the crypto-AI sector heading into 2025, the picture looks less like a random patchwork of projects and more like a maturing technology stack. Compute markets, inference engines, data layers, and agent platforms are starting to align into a coherent pipeline. The ASI alliance—a merger of Fetch.ai, SingularityNET, Ocean Protocol, and CUDOS—was a watershed moment, showing that fragmentation is giving way to consolidation.

In that landscape, Lightchain isn’t just another experimental chain chasing hype. Its design choices—particularly the AIVM (AI Virtual Machine) and Proof of Intelligence (PoI) consensus—position it squarely as an inference layer. Where Akash and Render bring raw GPUs to the table, and Ritual introduces modular on-chain compute, Lightchain is staking its claim as the environment where AI logic becomes natively verifiable inside a blockchain.

When I first started mapping this sector, I drew it out like a pipeline: ASI’s agent frameworks create tasks, GPU marketplaces like Akash or Render provide compute, inference engines like Lightchain or Ritual process outputs, and then application developers plug into the stack. Each entity had its place, and the interesting part wasn’t the silo, but the flow. This is the perspective that most reporting misses—Lightchain’s value is only visible when you place it in motion within the ecosystem.

Key Takeaways / TLDR

- The crypto-AI sector in 2025 is forming a recognizable stack: data, compute, inference, and application layers.

- ASI’s merger of major players shows a trend toward modular integration rather than siloed projects.

- Lightchain’s role is inference-focused, with PoI and AIVM enabling verifiable on-chain AI execution.

- Understanding Lightchain requires looking at its interactions with peers like Akash, Render, and Ritual.


Layer One: ASI Alliance and the Modular AI Stack

The Artificial Superintelligence (ASI) alliance may prove to be the “Linux moment” for decentralized AI. By merging Fetch.ai, SingularityNET, Ocean Protocol, and CUDOS into a single ASI token economy, it created a unified layer for agents, data markets, and distributed compute. That consolidation matters because it gives developers a predictable framework to build on, instead of juggling multiple fragmented tokens and APIs.

At the core of ASI’s ambition is modularity. Fetch.ai’s autonomous agents orchestrate tasks, Ocean feeds them data, SingularityNET provides the AI service marketplace, and CUDOS supplies distributed compute. But as comprehensive as that stack is, it still needs an inference environment where AI outputs can be anchored on-chain in a verifiable way. This is where Lightchain has a natural insertion point.

During my review of ASI’s roadmap, one detail stood out: while their focus is on building “open, interoperable infrastructure,” they don’t dictate where inference should live. That leaves space for external protocols like Lightchain to serve as the execution layer. Imagine an ASI agent designed to analyze financial data: it could dispatch training jobs to Akash GPUs, retrieve market data via Ocean, and then send its inference request to Lightchain’s AIVM, which would process and verify results on-chain. The final output could then be consumed by dApps in a trust-minimized form.
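To make that flow concrete, here is a minimal Python sketch of the agent pipeline. Every function and class name in it (`fetch_market_data`, `train_model`, `run_inference`, `PipelineTrace`) is hypothetical. None of them are real Fetch.ai, Ocean, Akash, or Lightchain APIs. Each stage is stubbed with local logic so the sketch runs on its own. It fetches data before training because the toy model needs data to fit, and it leaves out on-chain verification so the focus stays on the hand-offs between layers.

```python
from dataclasses import dataclass, field


@dataclass
class PipelineTrace:
    """Records which layer handled each step; all layer names are illustrative."""
    steps: list[str] = field(default_factory=list)


def fetch_market_data(trace: PipelineTrace) -> list[float]:
    # Hypothetical stand-in for a data-layer query (the Ocean role in the example).
    trace.steps.append("data layer: fetched price series")
    return [101.0, 102.5, 101.8, 103.2, 104.0]


def train_model(prices: list[float], trace: PipelineTrace) -> float:
    # Hypothetical stand-in for a training job on a GPU marketplace (the Akash role).
    # The "model" here is just the average step between consecutive prices.
    steps = [b - a for a, b in zip(prices, prices[1:])]
    trace.steps.append("compute layer: trained model")
    return sum(steps) / len(steps)


def run_inference(avg_step: float, prices: list[float], trace: PipelineTrace) -> float:
    # Hypothetical stand-in for an inference request to an on-chain runtime (the AIVM role).
    trace.steps.append("inference layer: produced forecast")
    return prices[-1] + avg_step


def agent_task() -> tuple[float, PipelineTrace]:
    """The agent only orchestrates: it hands each step to the layer suited for it."""
    trace = PipelineTrace()
    prices = fetch_market_data(trace)
    model = train_model(prices, trace)
    forecast = run_inference(model, prices, trace)
    return forecast, trace


forecast, trace = agent_task()
print(f"forecast: {forecast:.2f}")
for step in trace.steps:
    print(step)
```

The agent owns no compute or data. It only sequences calls, which is the division of labor the modular-stack argument depends on.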

This isn’t speculation for its own sake—it’s the type of modular interop that ASI’s whitepapers suggest. If ASI becomes the agent operating system, Lightchain becomes the runtime environment that guarantees integrity of AI-driven actions inside a blockchain economy. The two don’t compete; they reinforce each other.

Layer Two: Decentralized Compute – Akash and Render

Every AI system needs raw horsepower, and in the decentralized world, that horsepower comes from GPU markets. Akash and Render fill that role by providing flexible, cost-efficient access to compute without the lock-in of centralized providers.

Akash is essentially a decentralized cloud marketplace. Instead of spinning up AWS instances, developers can tap into idle GPUs offered by data centers or individual node operators. Pricing stays competitive because it’s set through a reverse auction in which providers bid for each deployment, and the supply side scales with hardware availability across the world. I’ve seen teams in Discord channels report cutting compute costs by as much as 50% compared to centralized providers. That makes Akash a strong fit for model training jobs, where economics often decide whether a project is feasible at all.
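The auction is what drives those savings. Akash describes its marketplace as a reverse auction: a tenant posts deployment requirements, providers bid, and the tenant accepts a bid. The sketch below models only that selection step in plain Python. The `Bid` fields, the GPU-count filter, the "take the cheapest qualifying bid" policy, and the prices are all illustrative assumptions. They are not Akash's actual data model or SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bid:
    provider: str
    gpus: int
    price_per_hour: float  # illustrative units, not Akash's actual pricing denomination


def select_bid(bids: list[Bid], min_gpus: int) -> Optional[Bid]:
    """Reverse auction: among providers meeting the requirement, take the lowest price."""
    qualifying = [b for b in bids if b.gpus >= min_gpus]
    if not qualifying:
        return None
    return min(qualifying, key=lambda b: b.price_per_hour)


bids = [
    Bid("provider-a", gpus=8, price_per_hour=12.0),
    Bid("provider-b", gpus=4, price_per_hour=5.0),
    Bid("provider-c", gpus=8, price_per_hour=9.5),
]
winner = select_bid(bids, min_gpus=8)
print(winner.provider if winner else "no qualifying bid")
```

The cheapest bid overall (`provider-b`) loses because it can't meet the GPU requirement. Providers compete on price only among those that can actually serve the job.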

Render, meanwhile, started in the graphics/rendering world but has pivoted aggressively into machine learning workloads. Its long-standing network of GPU providers has been repurposed for inference and training tasks. Render’s edge is reputation: it’s one of the earliest GPU-sharing projects with years of liquidity and hardware availability behind it.

The interesting connection here is that Lightchain doesn’t compete with Akash or Render; it depends on them. Lightchain’s AIVM may make inference verifiable, but the heavy lifting of training large models still has to happen somewhere. That “somewhere” is usually a GPU marketplace. In practice, you could train a model via Akash or Render and then deploy it into Lightchain’s environment for on-chain inference.

When I looked at developer chatter around this, one theme stood out: people aren’t asking “Which GPU marketplace should I use?” but rather “How do I pipe GPU-trained models into blockchain-native inference layers?” That’s the missing link where Lightchain fits.

Layer Three: On-Chain Inference – Ritual vs. Lightchain

Inference is the step where models generate outputs—answers, predictions, decisions. Doing that in a decentralized, verifiable way is a thorny problem. Two projects are approaching it from different directions: Ritual and Lightchain.

Ritual markets itself as an “EVM++” chain, designed to support modular inference for dApps. It emphasizes compatibility with existing Ethereum tooling while offering secure execution for AI calls. Developers I’ve spoken to see Ritual as the easier entry point for integrating AI into smart contracts because it feels like an extension of what they already know.

Lightchain, by contrast, is more radical. Its AIVM (AI Virtual Machine) is purpose-built for AI logic, not an add-on to Solidity. Combined with Proof of Intelligence consensus, it’s designed to reward real compute work directly. The trade-off is a steeper learning curve and less familiar tooling, but the payoff is an inference environment that treats AI workloads as first-class citizens of the chain.

The practical difference shows up in use cases. If you’re building a DeFi protocol that occasionally calls an AI model for predictions, Ritual might be the smoother fit. If you’re building an entire ecosystem around AI-native logic—say, marketplaces where models themselves compete for tasks—Lightchain’s architecture looks more compelling.

During my analysis, I found one overlooked angle: interoperability. There’s no reason Ritual and Lightchain couldn’t coexist. A dApp could call a lightweight inference on Ritual for efficiency, and then anchor critical model calls on Lightchain for verifiability. Developers already blend chains for specific needs—why wouldn’t inference follow the same pattern?

Where Lightchain Fits in the Crypto-AI Stack

Now that the stack is clear—ASI as the agent/data layer, Akash and Render providing GPU muscle, and Ritual offering modular inference—the question is: where exactly does Lightchain land?

The answer is in the “verification gap.” Decentralized compute can provide raw power, but it doesn’t guarantee that AI outputs are trustworthy. Traditional blockchains use PoW or PoS to reach consensus on transactions, but they aren’t built to verify AI workloads. Lightchain’s Proof of Intelligence consensus is designed to fill this gap by making inference verifiable as a native part of the chain.

I like to think of it as the “settlement layer for AI outputs.” Just as Ethereum settles financial transactions, Lightchain is designed to settle AI model calls. If an ASI agent requests a market forecast, Lightchain isn’t just passing the answer back—it’s embedding the verification of that answer directly into the blockchain. This positions it uniquely in the stack, not as an alternative to GPUs or agent frameworks, but as the arbiter of whether the AI-driven step was both completed and reliable.
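One way to picture “settling” a model call is as a commitment: record a digest that binds the model identifier, the input, and the output, so that anyone holding those three values can later check that nothing was swapped. The sketch below shows only that generic hash-commitment idea, using Python's standard `hashlib`. It is not how Proof of Intelligence works, since the internals of PoI aren't described here. The `ledger` dict is a hypothetical stand-in for on-chain storage. Note the scope: a commitment detects tampering after the fact, but on its own it does not prove the output was actually computed by that model.

```python
import hashlib
import json


def commit(model_id: str, prompt: str, output: str) -> str:
    """Digest that binds a model identifier, its input, and its output together."""
    record = json.dumps({"model": model_id, "input": prompt, "output": output}, sort_keys=True)
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


# Hypothetical stand-in for on-chain storage: request id -> commitment.
ledger: dict[str, str] = {}


def settle(request_id: str, model_id: str, prompt: str, output: str) -> None:
    ledger[request_id] = commit(model_id, prompt, output)


def verify(request_id: str, model_id: str, prompt: str, output: str) -> bool:
    """True only if the claimed (model, input, output) matches what was settled."""
    return ledger.get(request_id) == commit(model_id, prompt, output)


settle("req-1", "forecast-v1", "ETH 7d outlook", "moderately bullish")
print(verify("req-1", "forecast-v1", "ETH 7d outlook", "moderately bullish"))  # True
print(verify("req-1", "forecast-v1", "ETH 7d outlook", "strongly bullish"))    # False
```

A real inference layer would also need some way to attest that the committed output came from executing the model. That attestation is the part a consensus mechanism like PoI is meant to supply.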

When I spoke with developers exploring these protocols, one recurring theme was trust. They’re not worried about spinning up GPUs or deploying models; that part is increasingly commoditized. What they worry about is integrity: how to prove to end users that an AI output really came from the stated model and hasn’t been tampered with. Execution verification can answer that question, though it can’t by itself rule out a model that simply hallucinated. That’s the role Lightchain is aiming to own.

Trade-Offs and Workload Fit

Of course, no single protocol can be the answer for every workload. The strength of Lightchain is also its limitation—it’s optimized for inference verification, not for training massive models or providing generalized cloud compute.

- When Lightchain makes sense:

  - On-chain applications where verifiable inference is mission-critical (DeFi risk scoring, fraud detection, game AI agents).

  - Workloads that benefit from embedding AI logic directly into blockchain logic.

  - Teams that want tokenized rewards tied to real AI compute instead of abstract staking mechanisms.

- When it’s less ideal:

  - Large-scale training, which is still better suited to Akash or to Gensyn’s proof-of-learning model.

  - Lightweight inference inside existing EVM dApps, where Ritual offers faster developer onboarding.

  - General-purpose cloud compute, which remains the turf of GPU marketplaces like Akash and Render.
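The fit criteria above can be condensed into a small routing table. This is a hypothetical illustration of the article's own rules of thumb. It is not a recommendation engine or any project's tooling, and the `Workload` categories and `route` function are invented for the example.

```python
from enum import Enum


class Workload(Enum):
    LARGE_SCALE_TRAINING = "large-scale training"
    LIGHT_EVM_INFERENCE = "lightweight inference in an EVM dApp"
    CRITICAL_VERIFIABLE_INFERENCE = "mission-critical verifiable inference"


# Rules of thumb from the trade-off list, encoded as a lookup table.
ROUTES = {
    Workload.LARGE_SCALE_TRAINING: ["Akash", "Gensyn"],
    Workload.LIGHT_EVM_INFERENCE: ["Ritual"],
    Workload.CRITICAL_VERIFIABLE_INFERENCE: ["Lightchain"],
}


def route(workloads: list[Workload]) -> dict[str, list[str]]:
    """Map each stage of a hybrid pipeline to the layer(s) suggested for it."""
    return {w.value: ROUTES[w] for w in workloads}


# A hybrid plan: train, serve fast inference, and anchor only the critical calls.
plan = route([
    Workload.LARGE_SCALE_TRAINING,
    Workload.LIGHT_EVM_INFERENCE,
    Workload.CRITICAL_VERIFIABLE_INFERENCE,
])
for stage, layers in plan.items():
    print(f"{stage}: {', '.join(layers)}")
```

Encoding the choice per stage, rather than per project, reflects the composable view: a single application can route different steps to different layers.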

During my review of developer forums, I noticed that some teams are already experimenting with hybrid approaches: training on Akash, running fast inference through Ritual, and then anchoring mission-critical inferences on Lightchain. This modular strategy echoes how builders already mix infrastructure (Ethereum for security, Arbitrum for scaling, IPFS for storage). Lightchain’s trade-offs aren’t a weakness; they’re a sign it has found a focused role in the stack.

Conclusion – Lightchain in the 2025 Crypto-AI Stack

By mid-2025, the decentralized AI landscape is no longer a blur of competing projects but a layered stack where each protocol plays a distinct role. The ASI alliance has consolidated data and agent layers. Akash and Render have made GPU power accessible. Ritual has brought inference into an EVM-friendly form. And Lightchain has carved out the spot where verifiable inference becomes a blockchain-native primitive.

The key takeaway is that Lightchain doesn’t compete head-to-head with Akash, Render, or Ritual—it complements them. Its Proof of Intelligence consensus is designed for one purpose: tying real compute to verifiable AI outputs. If you’re building in crypto-AI, the question isn’t “Which protocol should I choose?” but “How do I compose these layers into a stack that matches my workload?”

The future likely won’t be single-chain dominance. It will be modular, with Lightchain acting as the integrity layer for AI inference. That’s the role in which it not only fits but becomes necessary.

FAQs

1. What makes Lightchain different from Ritual?

Ritual offers EVM-compatible inference modules, while Lightchain runs inference as a native function of its AIVM with Proof of Intelligence consensus.

2. Can Lightchain handle training like Akash or Render?

No. Lightchain is focused on inference and verification. Training remains better suited for GPU markets like Akash or Render.

3. How does Lightchain connect with the ASI alliance?

Lightchain isn’t part of ASI, but it can serve as the inference runtime for ASI’s agent and data workflows, acting as the trust anchor.

4. Is Lightchain suitable for everyday dApp developers?

Yes, but it’s best for developers building apps where verifiable inference matters. For lighter integrations, Ritual may be more practical.

5. Could Lightchain be used together with Ritual, Akash, and Render?

Absolutely. One emerging flow is training on Akash, running inference on Ritual for speed, and anchoring verification on Lightchain for integrity.